Process Lidar Datasets

In this tutorial we will learn how to use pralin/compose to process lidar data stored in a kDB dataset. We will see how to iterate over the lidar data frames and combine them into a single point cloud, how to downsample that point cloud, and how to store the result in a new dataset.

We will use a kDB store for this tutorial. Let's start it with:

kdb store --path tuto_kdb_store --extension kDBSensing

We will use the dataset of lidar data acquired near the buildings of the Gränsö castle. It can be downloaded and uncompressed with the following command:

curl -o lidar_buildings.kdb_dataset.xz "https://gitlab.liu.se/lks/tutorials_data/-/raw/main/lidar_buildings.kdb_dataset.xz?ref_type=heads&inline=false"

xz -d lidar_buildings.kdb_dataset.xz

The dataset can be imported into the database using the following command:

kdb datasets import --path tuto_kdb_store --filename lidar_buildings.kdb_dataset

Combine lidar frames into a single point cloud

This composition queries a database for 3D lidar scans (for instance, from a Velodyne or Ouster sensor), combines the scans into a single point cloud, and writes it to a file:

    compose:
      parameters:
        kdb_host: !required null
        kdb_port: 1242
        source_dataset_uri: !required null
        output_filename: !required null
      process:
        # Create a connection to the kDB database, using the parameters `kdb_host`
        # and `kdb_port`.
        - kdb/create_connection_handle:
            id: connection
            parameters:
              host: !param kdb_host
              port: !param kdb_port
        # Start a query for a lidar 3D scan dataset, using the connection and the
        # dataset specified in the parameters.
        - kdb/sensing/query_lidar3d_scan_dataset:
            id: qid
            inputs: ["connection[0]"]
            parameters:
              query: !param source_dataset_uri
        # Create a transformation provider using the proj library for handling
        # geographic transformations.
        - proj/create_transformation_provider:
            id: ctp
        # Loop over the scans in the dataset.
        - for_each:
            id: iterate_scan
            iterator: qid[0]
            process:
              # Accumulate the points. The first input is initialized with a
              # `default` point cloud, which is an empty point cloud, and is then
              # connected to the output of combine, so that the point cloud is
              # built iteratively.
              - pcl/combine:
                  id: combine
                  inputs: [ [default, "combine[0]"], "iterate_scan[0]", "ctp[0]" ]
        # Write the point cloud to a file.
        - pcl/pcd_writter:
            inputs: [ !param output_filename, "combine[0]"]
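
The feedback input `[default, "combine[0]"]` can be read as a fold over the scans: the first iteration starts from an empty point cloud, and every later iteration receives the previous result. The following Python sketch only illustrates that data flow. It is not how pralin is implemented, the names `combine` and `accumulate` are hypothetical, and it leaves out the geographic transformation that the `ctp` input provides.

```python
from functools import reduce
from typing import List, Tuple

Point = Tuple[float, float, float]


def combine(cloud: List[Point], scan: List[Point]) -> List[Point]:
    """Return a new cloud holding the accumulated points followed by the scan's points."""
    return cloud + scan


def accumulate(scans: List[List[Point]]) -> List[Point]:
    # The empty list plays the role of the `default` point cloud. On each
    # iteration the previous result is fed back in, like "combine[0]".
    return reduce(combine, scans, [])


# Two tiny hand-made scans, used only for illustration.
scans = [
    [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)],
    [(0.0, 1.0, 0.0)],
]
cloud = accumulate(scans)
print(len(cloud))
```

With no scans at all, `accumulate` returns the empty cloud, which mirrors what the `default` input stands for in the composition.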

With the Gränsö dataset and the tuto_kdb_store database, the composition can be started with:

pralin compose --parameters "{ kdb_host: 'tuto_kdb_store', source_dataset_uri: 'http://askco.re/examples#lidar_granso', output_filename: 'test.pcd' }" --
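
The values passed with `--parameters` fill in the parameters declared at the top of the composition: those marked `!required` must be supplied, while `kdb_port` falls back to its default of 1242. The sketch below is one way to picture that resolution step in Python. It is an assumption about the behaviour, not pralin's actual code, and `REQUIRED` and `resolve_parameters` are hypothetical names.

```python
REQUIRED = object()  # hypothetical stand-in for the `!required` tag

# Parameters as declared in the composition above.
DECLARED = {
    "kdb_host": REQUIRED,
    "kdb_port": 1242,
    "source_dataset_uri": REQUIRED,
    "output_filename": REQUIRED,
}


def resolve_parameters(declared, supplied):
    """Merge supplied values over the declared defaults; fail on a missing required one."""
    resolved = {}
    for name, default in declared.items():
        if name in supplied:
            resolved[name] = supplied[name]
        elif default is REQUIRED:
            raise ValueError(f"missing required parameter: {name}")
        else:
            resolved[name] = default
    return resolved


params = resolve_parameters(DECLARED, {
    "kdb_host": "tuto_kdb_store",
    "source_dataset_uri": "http://askco.re/examples#lidar_granso",
    "output_filename": "test.pcd",
})
print(params["kdb_port"])
```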

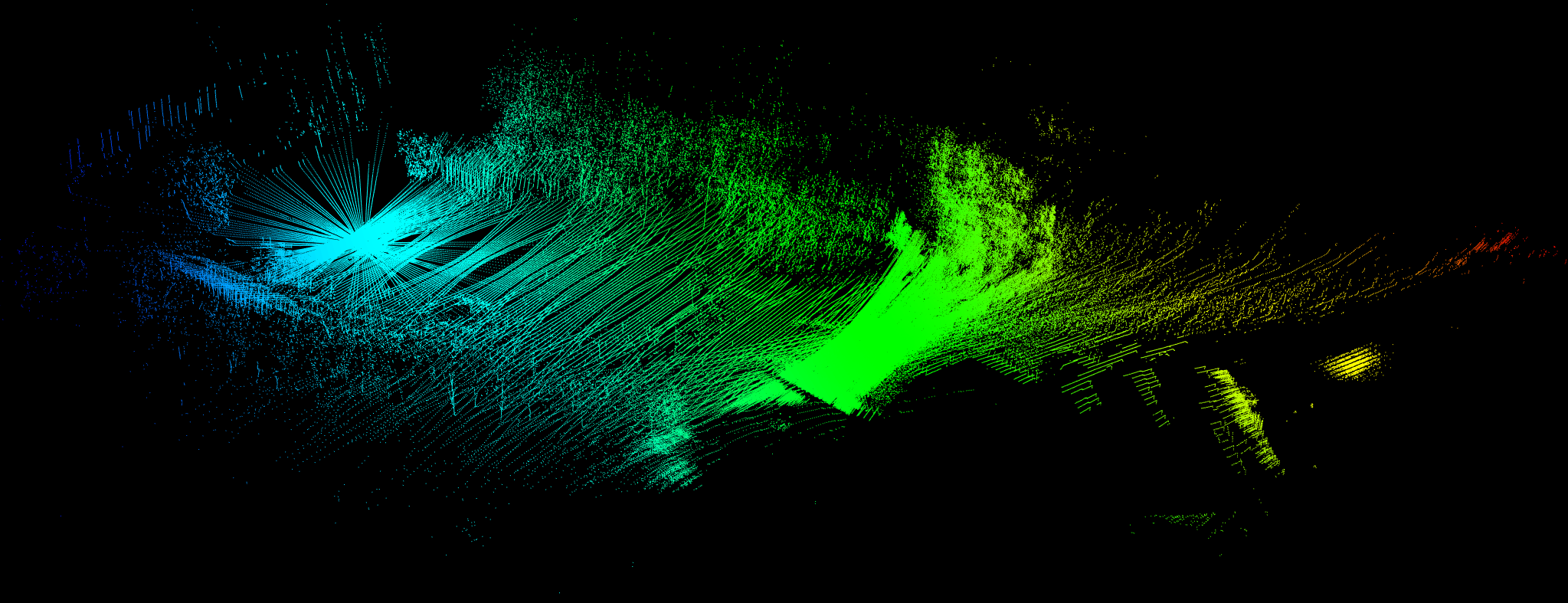

After running the composition, the file can be visualized with pcl_viewer (from the pcl-tools package):

pcl_viewer test.pcd

This should lead to an image similar to:
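
If no viewer is at hand, the header of the PCD file already tells how many points were written. A PCD file starts with plain-text header lines (`VERSION`, `FIELDS`, `WIDTH`, `HEIGHT`, `POINTS`, `DATA`, ...) followed by the point data. The helper `read_pcd_header` below is a hypothetical name, and the sample file is made up for the demonstration. On the output of the composition it would be called as `read_pcd_header("test.pcd")`.

```python
import os
import tempfile


def read_pcd_header(path):
    """Return the PCD header entries as a dict of strings, stopping at the DATA line."""
    header = {}
    # Open in binary mode, since the data after the header may be binary.
    with open(path, "rb") as f:
        for raw in f:
            line = raw.decode("ascii", errors="replace").strip()
            if not line or line.startswith("#"):
                continue
            key, _, value = line.partition(" ")
            header[key] = value
            if key == "DATA":
                break
    return header


# A tiny hand-written ASCII PCD file, used only to exercise the helper.
sample = (
    "# .PCD v0.7 - Point Cloud Data file format\n"
    "VERSION 0.7\n"
    "FIELDS x y z\n"
    "SIZE 4 4 4\n"
    "TYPE F F F\n"
    "COUNT 1 1 1\n"
    "WIDTH 2\n"
    "HEIGHT 1\n"
    "VIEWPOINT 0 0 0 1 0 0 0\n"
    "POINTS 2\n"
    "DATA ascii\n"
    "0 0 0\n"
    "1 1 1\n"
)
with tempfile.NamedTemporaryFile("w", suffix=".pcd", delete=False) as tmp:
    tmp.write(sample)
header = read_pcd_header(tmp.name)
os.remove(tmp.name)
print(header["POINTS"], header["FIELDS"])
```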

Downsample point cloud

This composition is similar to the previous one, but it includes a downsampling step:

    compose:
      parameters:
        kdb_host: !required null
        kdb_port: 1242
        source_dataset_uri: !required null
        output_filename: !required null
      process:
        # Create a connection to the kDB database, using the parameters `kdb_host`
        # and `kdb_port`.
        - kdb/create_connection_handle:
            id: connection
            parameters:
              host: !param kdb_host
              port: !param kdb_port
        # Start a query for a lidar 3D scan dataset, using the connection and the
        # dataset specified in the parameters.
        - kdb/sensing/query_lidar3d_scan_dataset:
            id: qid
            inputs: ["connection[0]"]
            parameters:
              query: !param source_dataset_uri
        # Create a transformation provider using the proj library for handling
        # geographic transformations.
        - proj/create_transformation_provider:
            id: ctp
        # Loop over the scans in the dataset.
        - for_each:
            id: iterate_scan
            iterator: qid[0]
            process:
              # Accumulate the points. The first input is initialized with a
              # `default` point cloud, and is then connected to the output of the
              # downsampler for optimization purposes.
              - pcl/combine:
                  id: combine
                  inputs: [ [default, "downsample[0]"], "iterate_scan[0]", "ctp[0]" ]
              # Downsample the point cloud so that it contains at most one point
              # per 1 m box.
              - pcl/downsample:
                  id: downsample
                  inputs: ["combine[0]"]
                  parameters:
                    x: 1
                    y: 1
                    z: 1
        # Write the point cloud to a file.
        - pcl/pcd_writter:
            inputs: [ !param output_filename, "downsample[0]"]
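
To make the downsampling step concrete, here is a minimal Python sketch of a voxel-grid filter that keeps one point per box of size `x` by `y` by `z`. It assumes that each box is replaced by the centroid of its points. Whether `pcl/downsample` uses the centroid or another representative is not stated in this tutorial, so treat that choice, and the function name `downsample`, as illustrative only.

```python
import math
from typing import Dict, List, Tuple

Point = Tuple[float, float, float]


def downsample(cloud: List[Point], x: float = 1.0, y: float = 1.0,
               z: float = 1.0) -> List[Point]:
    """Keep one point (the centroid, by assumption) per box of size x * y * z."""
    sums: Dict[Tuple[int, int, int], List[float]] = {}
    for px, py, pz in cloud:
        # Index of the box that contains the point.
        key = (math.floor(px / x), math.floor(py / y), math.floor(pz / z))
        acc = sums.setdefault(key, [0.0, 0.0, 0.0, 0.0])
        acc[0] += px
        acc[1] += py
        acc[2] += pz
        acc[3] += 1.0
    return [(sx / n, sy / n, sz / n) for sx, sy, sz, n in sums.values()]


# Same feedback as in the composition: the downsampled cloud is the input of
# the next combine step, so the accumulated cloud never holds more than one
# point per box plus the points of the current scan.
scans = [
    [(0.2, 0.2, 0.2), (0.4, 0.4, 0.4)],
    [(0.6, 0.6, 0.6), (1.5, 0.5, 0.5)],
]
cloud: List[Point] = []
for scan in scans:
    cloud = downsample(cloud + scan)
print(len(cloud))
```

Downsampling inside the loop trades a little accuracy for memory: a centroid computed from earlier centroids is not the exact centroid of all raw points in the box, but the cloud stays small while the scans are accumulated.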

Store the point cloud in the database

In this composition, instead of saving the resulting point cloud to a file, we will save it as a new dataset in the kDB store:

    compose:
      parameters:
        kdb_host: !required null
        kdb_port: 1242
        source_dataset_uri: !required null
        destination_dataset_uri: !required null
      process:
        # Create a connection to the kDB database, using the parameters `kdb_host`
        # and `kdb_port`.
        - kdb/create_connection_handle:
            id: connection
            parameters:
              host: !param kdb_host
              port: !param kdb_port
        # Query the source dataset.
        - kdb/datasets/get:
            id: source_dataset
            inputs: ["connection[0]"]
            parameters:
              query: !param source_dataset_uri
        # Create the destination dataset as a subdataset of the source dataset,
        # reusing the geometry defined by the source.
        # However, we need to change the type from `http://askco.re/sensing#lidar3d_scan`
        # to `http://askco.re/sensing#point_cloud`.
        # The URI of the new dataset is given by the parameter `destination_dataset_uri`.
        - kdb/datasets/create:
            inputs: ["connection[0]", "source_dataset[0]"]
            parameters:
              uri: !param destination_dataset_uri
              type: http://askco.re/sensing#point_cloud
        # Start a query for a lidar 3D scan dataset, using the connection and the
        # dataset specified in the parameters.
        - kdb/sensing/query_lidar3d_scan_dataset:
            id: qid
            inputs: ["connection[0]"]
            parameters:
              query: !param source_dataset_uri
        # Create a transformation provider using the proj library for handling
        # geographic transformations.
        - proj/create_transformation_provider:
            id: ctp
        # Loop over the scans in the dataset.
        - for_each:
            id: iterate_scan
            iterator: qid[0]
            process:
              # Accumulate the points. The first input is initialized with a
              # `default` point cloud, and is then connected to the output of the
              # downsampler for optimization purposes.
              - pcl/combine:
                  id: combine
                  inputs: [ [default, "downsample[0]"], "iterate_scan[0]", "ctp[0]" ]
              # Downsample the point cloud so that it contains at most one point
              # per 1 m box.
              - pcl/downsample:
                  id: downsample
                  inputs: ["combine[0]"]
                  parameters:
                    x: 1
                    y: 1
                    z: 1
        # Insert the downsampled point cloud into the destination dataset.
        - kdb/sensing/insert_point_cloud_in_dataset:
            inputs: [ "connection[0]", "downsample[0]"]
            parameters:
              query: !param destination_dataset_uri